Introduction to Color Spaces in Video

What is color space?

Color space is a mathematical representation of a range of colors. When referring to video, many people use the term “color space” when actually referring to the “color model.” Some common color models include RGB, YUV 4:4:4, YUV 4:2:2, and YUV 4:2:0. This page aims to explain the representation of color in a video setting while outlining the differences between common color models.

How are colors represented digitally?

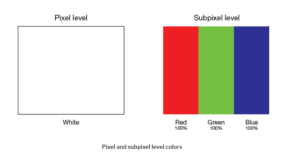

Virtually all displays (whether TV, smartphone, monitor, or otherwise) start by displaying colors at the same level: the pixel. The pixel is a small component capable of displaying one color at a time. Pixels are like tiles in a mosaic, with each pixel representing a single sample of a larger image. When properly aligned and illuminated, they are collectively presented to the viewer as a complex image.

While the human eye perceives each pixel as a single color, every pixel is actually made up of the combination of three subpixels colored red, green, and blue.

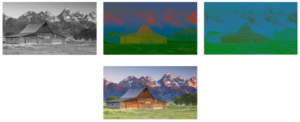

Pixel representation of a sample of a larger image

By combining these subpixels in different ratios, different colors can be obtained.

RGB color space

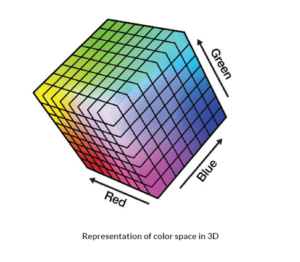

By mixing red, green, and blue, it’s possible to obtain a wide spectrum of colors. This is referred to as RGB additive mixing.
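As a rough illustration of additive mixing, the sketch below adds 8-bit RGB triples channel by channel and clamps each result to 255. The `mix` helper and the color constants are hypothetical names chosen for this example; they are not part of any particular library.

```python
def mix(*colors):
    """Additively mix 8-bit RGB triples, clamping each channel to 255."""
    return tuple(min(255, sum(channel)) for channel in zip(*colors))


# Fully lit subpixels for each primary (8 bits per channel is assumed).
RED = (255, 0, 0)
GREEN = (0, 255, 0)
BLUE = (0, 0, 255)

yellow = mix(RED, GREEN)       # red + green light
white = mix(RED, GREEN, BLUE)  # all three primaries at full intensity
```

Lowering the intensity of one or more channels before mixing gives the intermediate shades.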

The color space itself is a mathematical representation of a range of colors:

8-bit vs 10-bit color

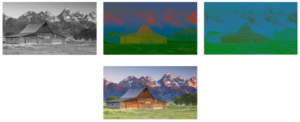

YUV or YCbCr color space

The YUV color space was invented as a broadcast solution for sending color information through channels built for monochrome signals. Color is added to a monochrome signal by combining that signal (also called brightness, luminance, or luma, and represented by the symbol Y) with two chrominance signals (also called chroma, and represented by the symbols UV or CbCr). This allows for full color definition and image quality on the receiving end of the transmission.

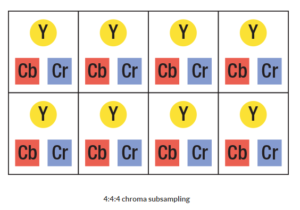

Storing or transferring video over IP can be taxing on network infrastructure. Chroma subsampling represents the video at a fraction of the original bandwidth, reducing the strain on the network. It takes advantage of the fact that the human eye is more sensitive to brightness than to color. By reducing the detail carried in the color information, video can be transferred at a lower bitrate with a difference that is barely noticeable to viewers.

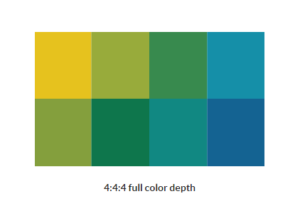

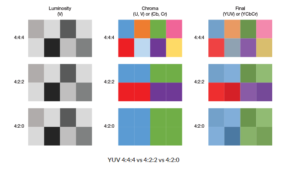

YUV 4:4:4

Full color resolution, with no chroma subsampling, is usually referred to as 4:4:4. The first number indicates that the reference block is four pixels across, the second indicates that there are four unique chroma samples in the first row, and the third indicates that there are four changes in chroma samples for the second row. These numbers are unrelated to the size of individual pixels.

Each pixel then receives three signals: one luma (brightness) component, represented by Y, and two color-difference components known as chroma, represented by Cb (U) and Cr (V).

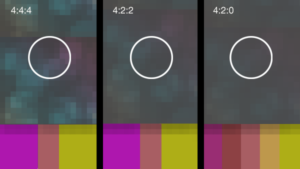

YUV subsampling

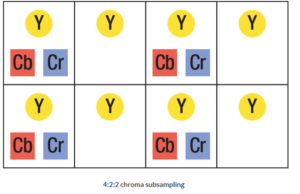

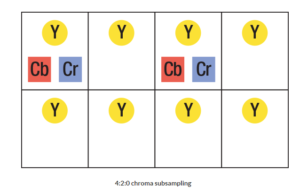

YUV 4:2:2 vs. 4:2:0

In 4:2:2 subsampling, the chroma components are sampled at half the horizontal frequency of the luma. The chroma components from pixels one, three, five, and seven are shared with pixels two, four, six, and eight respectively. This reduces the overall image bandwidth by 33%.

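Similarly, in 4:2:0 subsampling, the chroma components are sampled at a fourth of the frequency of the luma: half horizontally and half vertically, so one pair of chroma samples is shared by each 2×2 block of pixels. This reduces the overall image bandwidth by 50%.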
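The sharing pattern described here can be sketched with plain Python lists. This is a minimal illustration that simply drops samples; real encoders often filter or average neighboring chroma values instead, and the function names and sample data are hypothetical.

```python
def subsample_422(plane):
    """Keep every second chroma sample in each row (half horizontal resolution)."""
    return [row[::2] for row in plane]


def subsample_420(plane):
    """Keep every second sample on every second row (half resolution both ways)."""
    return [row[::2] for row in plane[::2]]


# A made-up 2-row by 8-pixel chroma plane with one sample per pixel (4:4:4).
cb = [
    [10, 11, 12, 13, 14, 15, 16, 17],
    [20, 21, 22, 23, 24, 25, 26, 27],
]

cb_422 = subsample_422(cb)  # 2 rows x 4 samples: pixels 1, 3, 5, 7 of each row
cb_420 = subsample_420(cb)  # 1 row x 4 samples: one sample per 2x2 block
```

The luma plane is left untouched in both cases; only the two chroma planes are reduced.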

Monochrome

Since most displays are black by default, the simplest way to portray an image is by brightness only. This is known as a monochromatic image:

In such cases, the incoming signal will only have a luma (Y) component, and no chroma components (U or V).

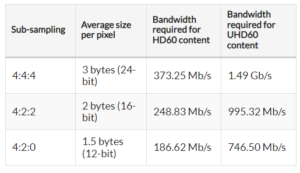

Subsampling size saving

With 8 bits per component,

- In 4:4:4, each pixel will require three bytes of data (since all three components are sent per pixel).

- In 4:2:2, every two pixels will have four bytes of data. This gives an average of two bytes per pixel (33% bandwidth reduction).

- In 4:2:0, every four pixels will have six bytes of data. This gives an average of 1.5 bytes per pixel (50% bandwidth reduction).
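The figures above can be checked with a short calculation. The sketch below assumes the usual reading of the three-number notation over a block that is four pixels wide and two rows high; `bytes_per_pixel` and `saving_vs_444` are hypothetical helper names.

```python
from fractions import Fraction


def bytes_per_pixel(j, a, b, bits_per_component=8):
    """Average bytes per pixel for a J:a:b scheme over a J-wide, 2-row block."""
    luma_samples = 2 * j          # one Y sample per pixel, two rows
    chroma_samples = 2 * (a + b)  # Cb and Cr each contribute a + b samples
    total_bits = (luma_samples + chroma_samples) * bits_per_component
    return Fraction(total_bits, 8 * luma_samples)


def saving_vs_444(j, a, b):
    """Fractional bandwidth reduction relative to 4:4:4."""
    return 1 - bytes_per_pixel(j, a, b) / bytes_per_pixel(4, 4, 4)


for scheme in [(4, 4, 4), (4, 2, 2), (4, 2, 0)]:
    bpp = float(bytes_per_pixel(*scheme))
    saved = float(saving_vs_444(*scheme))
    print(scheme, bpp, f"{saved:.0%}")
```

Passing `bits_per_component=10` gives the corresponding averages for 10-bit video, assuming the samples are tightly packed.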

When to use chroma subsampling and when to avoid it?

Chroma sub-sampling 4:4:4 vs 4:2:2 vs 4:2:0

Color space conversion

It’s possible to convert between RGB and YUV. Converting to YUV and using subsampling when appropriate will help reduce the bandwidth required for this transmission.
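As one possible illustration of such a conversion, the sketch below uses the full-range BT.601 coefficients found in JPEG/JFIF. This choice is an assumption: the page does not say which matrix applies, and other standards (for example BT.709, or limited-range video levels) use different constants. The function names are hypothetical.

```python
def _clamp8(value):
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return max(0, min(255, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to 8-bit YCbCr (full-range BT.601, assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return _clamp8(y), _clamp8(cb), _clamp8(cr)


def ycbcr_to_rgb(y, cb, cr):
    """Convert 8-bit YCbCr back to 8-bit RGB (inverse of the above)."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return _clamp8(r), _clamp8(g), _clamp8(b)


# Neutral colors carry no color difference, so both chroma values sit at 128.
white = rgb_to_ycbcr(255, 255, 255)
gray = rgb_to_ycbcr(128, 128, 128)
```

Because each component is rounded to 8 bits, a round trip through YCbCr may differ from the original RGB value by a small amount.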